Reactive Configuration¶

In the context of multi-tenancy, one of the most challenging problems is communicating changes (additions, updates, deletions) to tenant configuration across all running instances of the app server without a graceful restart of the running stack. This is especially hard in a production environment that is capable of auto-scaling.

The running app server processes/containers need to be notified of three major events:

- Add tenant => This event is sent when a new tenant is on-boarded to the platform. The payload contains the newly spun up DB/cache config for that tenant.

- Update tenant => This event is sent when the configuration (such as the DB/cache configuration) of a specific tenant has been updated.

- Delete tenant => This event is sent when a tenant exits the platform.

Upon receiving any of the above mentioned events, each app server process/container needs to update its respective in-memory configuration in order to process subsequent requests appropriately.

This leads to the formulation of the following two problems:

- How do all running processes/containers receive the event?

- Once the event is received, when should it get processed?

Pub/Sub¶

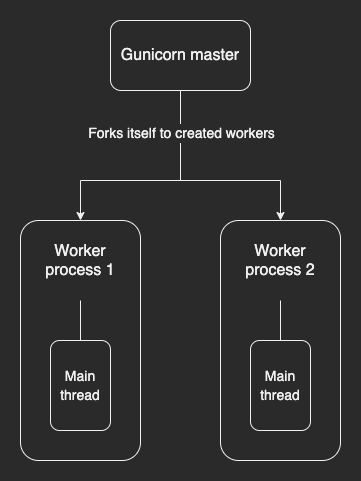

In order to address the first question, consider the following simplistic server architecture:

The problem here is fairly straightforward. One of the processes would publish an event and the other processes, including the publisher, would have to receive this event.

The solution mainly involves the following two components:

- A Pub/Sub provider (like Redis, RabbitMQ, etc.)

- A background event listener (thread), launched per process, which listens to the pub/sub channel onto which events are fired.

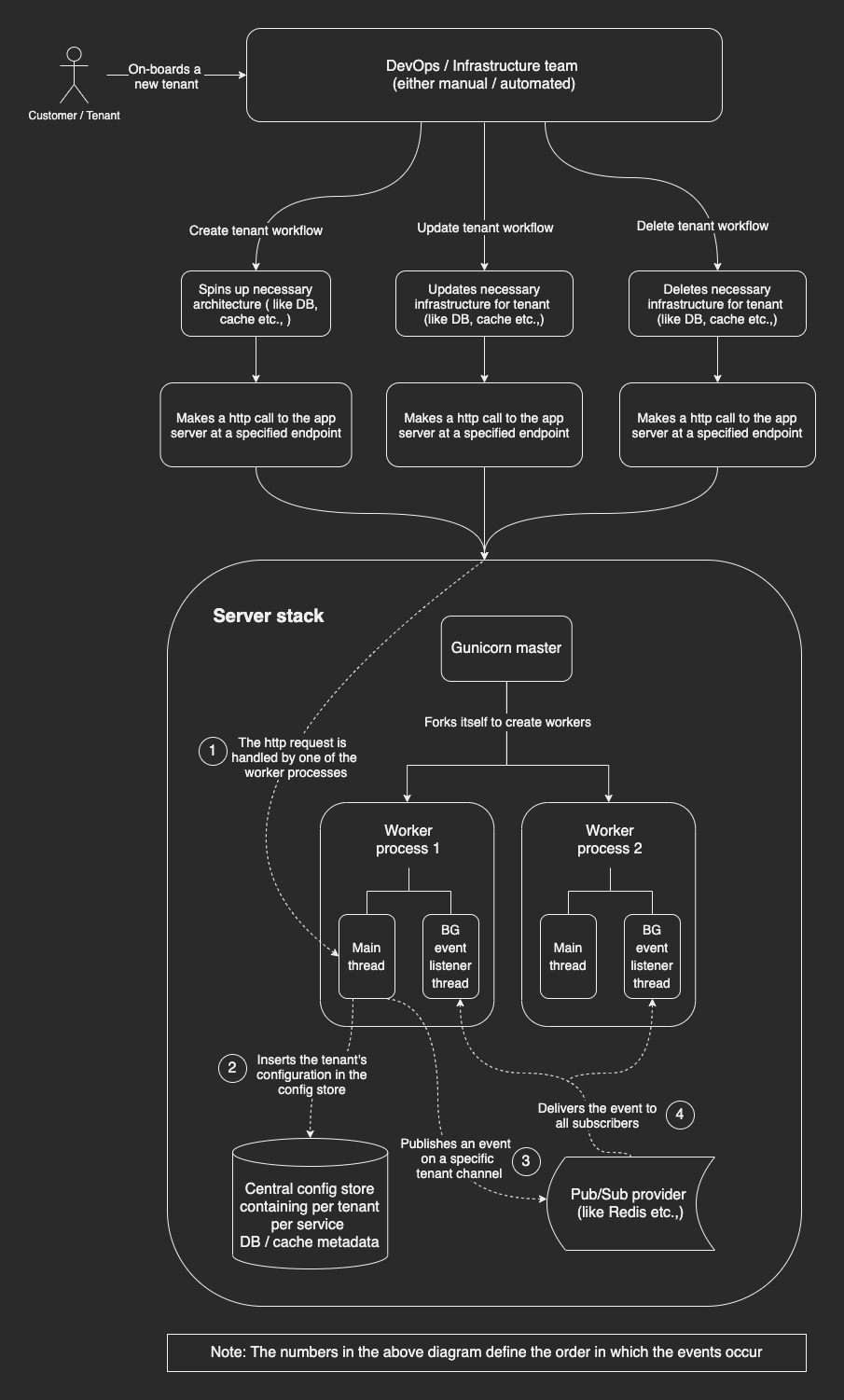

To illustrate better, please look at the architecture diagram below:

Let's see how this solution works in the context of multi-tenancy:

1. When the app server boots, a background thread is launched per process which starts listening to a specific set of channels (like `tenant_create`, `tenant_update`, etc.) registered with the Pub/Sub provider (e.g. Redis).

2. When a new tenant is on-boarded, an HTTP call is made to the app server. One of the processes receives this request, processes it and subsequently inserts the new tenant configuration into the central KV store. Once done, a `tenant_create` event is fired on the `tenant_create` channel.

3. Now each process, including the publisher, receives this event in the background thread. A callback is triggered subsequently which processes the received event.

4. In the scenario of a tenant update or tenant delete, the operational semantics are quite similar to points 2 and 3 mentioned above.
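The fan-out described in the steps above can be sketched in plain Python. This is only an illustration under stated assumptions: `InProcessBroker` is a hypothetical in-memory stand-in for a real Pub/Sub provider such as Redis, and the "workers" are simulated as listener threads inside one process rather than as separate processes. None of these names come from the library.

```python
import queue
import threading


class InProcessBroker:
    """Toy stand-in for a Pub/Sub provider such as Redis (illustration only)."""

    def __init__(self):
        self._subscribers = {}  # channel name -> list of subscriber inboxes
        self._lock = threading.Lock()

    def subscribe(self, channel):
        inbox = queue.Queue()
        with self._lock:
            self._subscribers.setdefault(channel, []).append(inbox)
        return inbox

    def publish(self, channel, payload):
        with self._lock:
            inboxes = list(self._subscribers.get(channel, []))
        for inbox in inboxes:
            inbox.put(payload)


_STOP = object()  # sentinel used to shut a listener down


def start_listener(inbox, callback):
    """Launch a daemon thread that hands every received event to callback."""

    def run():
        while True:
            event = inbox.get()
            if event is _STOP:
                break
            callback(event)

    thread = threading.Thread(target=run, daemon=True)
    thread.start()
    return thread


def demo(worker_count=3):
    broker = InProcessBroker()
    received = {}
    workers = []
    for worker_id in range(worker_count):
        inbox = broker.subscribe("tenant_create")
        received[worker_id] = []
        thread = start_listener(inbox, received[worker_id].append)
        workers.append((inbox, thread))

    # Any worker may publish; every subscriber, the publisher included,
    # receives the event on its own listener thread.
    broker.publish("tenant_create", {"tenant_id": "acme"})

    for inbox, thread in workers:
        inbox.put(_STOP)
        thread.join()
    return received
```

Calling `demo()` returns one list of received events per simulated worker, and each list contains the single published payload. Adding more workers only means adding more subscribers, which is why the approach is independent of the worker count.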

Important

The elegance of the above solution lies in the fact that it works irrespective of the number of workers spawned by the server. As a result, it works equally well in an auto-scaled containerised environment.

Challenges¶

Though the above solution seems fairly straightforward and works well for Django, the following challenges were encountered during actual implementation.

- Worker type problem: The worker type used by the underlying WSGI server for serving the Django app can vary.

    - For example, if `gunicorn` is being used, then the worker type could be any of `sync`, `gthread`, `gevent`, etc.

    - ASGI servers are also becoming widely popular, with the async paradigm taking centre stage in the Django 3.x releases.

    Now the behaviour implemented by the background thread might not work well for all of these worker/server types since they have inherently different operational semantics. In order to solve this, the library receives the worker type being used as part of the configuration from `settings.py`, which in turn decides the behaviour of the background thread. To know more about how to configure this setting, click here.

- The forking problem: In order to scale and take advantage of the underlying hardware, process managers (like Gunicorn, Celery, etc.) typically spawn multiple worker/child processes using a pre-fork server model. Interestingly, there are two variations of this model depending on when the fork actually happens.

    - The master process forks itself first to spawn workers and then loads the Django app in each of the worker processes. This is the default behaviour in Gunicorn (and in uWSGI when its `lazy-apps` option is enabled).

    - The master process loads the Django app first and then forks itself. This is the default behaviour in Celery and in uWSGI. The same can be achieved in Gunicorn by setting `preload_app` to `True`.

    For the pub/sub mechanism to work correctly, it is mandatory that a background event listener (thread) be spawned in each of the worker processes to receive and process events.

    Assuming that the code for launching the BG event listener (thread) is placed in the `ready` method of the `tenant_router` app, it would work fine for the first variant mentioned above but would fail for the second variant, since background threads launched in the parent process don't survive a fork and hence won't be copied into the child processes.

    Also, apart from the pre-fork server model, it is possible to use other methods to launch child/worker processes, though they are not widely used in practice. More info about this can be found here.

    Considering all the above mentioned cases, it is impossible for the library to determine the right place to put the code responsible for launching the background event listener (thread).

    In order to solve this, the library exposes a callback which has to be invoked in every child/worker process once it's spawned. It is the responsibility of the developers to hook this callback in the right place so that it is invoked appropriately.

    For example, if gunicorn is being used, then in the `gunicorn.conf.py` file, place the following code:

        # on_worker_init and on_worker_exit are the callbacks exposed by the library
        # they should be imported and used as follows:
        from tenant_router.bootstrap import on_worker_init, on_worker_exit

        ...

        def post_worker_init(worker):
            on_worker_init()

        def worker_exit(server, worker):
            on_worker_exit()

    Note

    The `on_worker_exit` callback is responsible for gracefully shutting down the background event listener (thread). Although it would be ideal if invoked from an appropriate hook, it is not entirely mandatory in case such a hook is unavailable.

    Similarly, if uWSGI is being used, then these callbacks could be invoked from the post-fork hook.

    If, for some reason, the WSGI server doesn't expose such a hook, the low-level `os.register_at_fork` function can be used, though this might not work on some platforms.

    Important

    If Celery is being used, then the app does not have to do anything as the library already hooks these callbacks to the `worker_process_init` and `worker_process_shutdown` signals.
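The forking problem can be reproduced with a short, Unix-only sketch. It starts a placeholder listener thread in the parent (as would happen with a preloaded app), forks, and asks the child whether it still has that thread. It then registers a relaunch hook with `os.register_at_fork` and forks again. The helper names (`start_listener`, `listener_running_in_child`, `demo`) are hypothetical and are not part of the library; the real callbacks to hook are `on_worker_init` and `on_worker_exit`.

```python
import os
import threading
import time


def _listen():
    # Placeholder loop standing in for the real event listener.
    while True:
        time.sleep(0.05)


def start_listener():
    threading.Thread(target=_listen, name="listener", daemon=True).start()


def listener_running():
    return any(t.name == "listener" for t in threading.enumerate())


def listener_running_in_child():
    """Fork once and ask the child whether it has a listener thread."""
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:  # child: report through the pipe, then exit immediately
        try:
            os.close(read_fd)
            os.write(write_fd, b"1" if listener_running() else b"0")
        finally:
            os._exit(0)
    os.close(write_fd)
    answer = os.read(read_fd, 1)
    os.close(read_fd)
    os.waitpid(pid, 0)
    return answer == b"1"


def demo():
    start_listener()  # launched before the fork, as with a preloaded app
    without_hook = listener_running_in_child()
    # Relaunch the listener in every child forked from now on.
    os.register_at_fork(after_in_child=start_listener)
    with_hook = listener_running_in_child()
    return without_hook, with_hook
```

On a platform with `os.fork`, the first child has no listener thread while the second one does. Recent Python versions may emit a `DeprecationWarning` when forking a multi-threaded process, which is one more reason to prefer the server's own post-fork hook where one exists.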

Event Queue¶

Since the event reception part has been solved, the next task is to devise a scheme to process it. The following scenario illustrates the various challenges involved in designing such a scheme:

Challenges and constraints¶

- The points discussed below assume the same server (gunicorn) setup mentioned above, i.e. two threads: one main thread and one background event listener (thread).

- Assume that a `tenant_update` event is fired and is received in the background event listener (thread). Assume that a callback is triggered subsequently which performs the following:

    - Updates the in-memory configuration with the updated DB config from the event payload.

    - Invalidates/closes the old DB connection.

    Also note that this callback would get executed in the background event listener (thread).

- Since there are two threads, there is a thread synchronisation problem that exists inherently due to context switching. Let's look at how this might affect the above two operations:

    - The first operation is atomic in nature and so will not be affected, i.e. the context switch could occur either before or after the update but not in between. As a result, this can happen safely in the background event listener (thread).

    - The second operation, however, entails the following sub-cases:

        - Certain ORM libraries, like the Django ORM, maintain thread-local connections. In such cases, the background event listener (thread) has no way to access the connection object that has to be closed/invalidated since it would reside in another thread, in this case, the main thread.

        - Certain ORM libraries, like PyModm/Mongoengine, maintain connections at the process level. To explain why this would create a problem, consider the following scenario:

            Let's assume that the main thread is serving an HTTP request and, in the process, makes a DB query using a fresh process-specific connection. Assume that an event is suddenly received by the BG event listener (thread) and a context switch happens. If the callback triggered by event reception executes in this thread and invalidates/closes the process-specific connection that the main thread created, then when the context switches back to the main thread, it would suffer from a broken connection error when it uses the same connection to make further DB queries in the same request. As a result, this one specific HTTP request would fail.
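The thread-local sub-case can be illustrated with the standard library alone. In this sketch, `_connections` and `get_connection` are hypothetical stand-ins for an ORM's per-thread connection registry and are not taken from Django or from the library. The connection is modelled as a plain dictionary.

```python
import threading

# Hypothetical stand-in for an ORM's per-thread connection registry.
_connections = threading.local()


def get_connection():
    """Return this thread's connection, creating it on first use."""
    if not hasattr(_connections, "conn"):
        _connections.conn = {
            "owner": threading.current_thread().name,
            "open": True,
        }
    return _connections.conn


def demo():
    main_conn = get_connection()  # created on the calling (main) thread
    seen_by_listener = []

    def listener_callback():
        # The listener cannot reach the caller's object: it gets its own.
        seen_by_listener.append(get_connection())

    listener = threading.Thread(target=listener_callback, name="listener")
    listener.start()
    listener.join()
    return main_conn, seen_by_listener[0]
```

The two returned objects are distinct, so anything the listener thread closes is its own connection and the one held by the request thread stays untouched.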

Solution¶

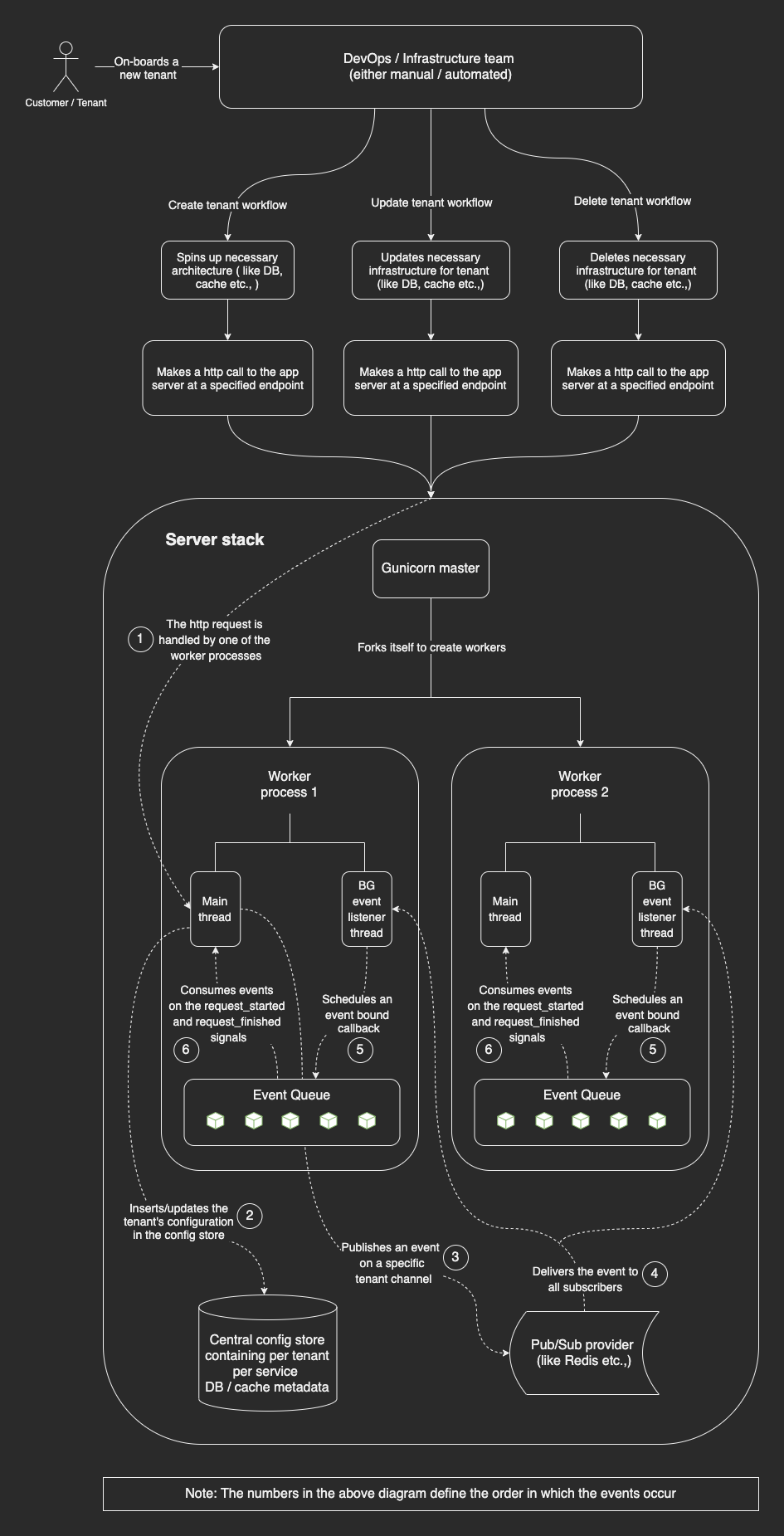

Considering all the above cases, the solution architecture diagram is as below.

To understand better, let's do a walk-through of the solution.

- Consider that a `tenant_update` event has been fired and subsequently received in the background event listener (thread). The callback invoked in the background thread does not process the event right away. Instead, it binds the actual callback with the event, creating a bound callable that is then pushed into the central event queue.

- The central event queue gets processed only during the `request_started` and `request_finished` signals. This ensures that all callbacks hooked to the `tenant_update` event get executed on the main thread. As a result, the thread synchronisation problem mentioned earlier is solved as follows:

    - Since the callback gets executed on the main thread, it now has access to the thread-local connection object that would have to be invalidated.

    - Since the connection would now be invalidated/closed in the main thread, before the view starts or after the view finishes, the view would never experience a broken connection error.

- The same operational semantics are followed for the other tenant lifecycle events as well.
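A minimal sketch of this deferral pattern, using only the standard library, is given here. The names `event_queue`, `on_event_received`, `drain_event_queue` and `close_stale_connection` are hypothetical and do not reflect the library's internals. In a Django project, a function like `drain_event_queue` would be connected to the `request_started` and `request_finished` signals from `django.core.signals`; here it is simply called from the main thread.

```python
import functools
import queue
import threading

event_queue = queue.Queue()  # central queue shared by both threads
executed_on = []  # records (tenant_id, thread name) for each callback run


def close_stale_connection(event):
    # A real callback would invalidate the DB connection; this one only
    # records which thread it ran on.
    executed_on.append((event["tenant_id"], threading.current_thread().name))


def on_event_received(event, callback=close_stale_connection):
    """Runs on the listener thread: defer the work instead of doing it."""
    event_queue.put(functools.partial(callback, event))


def drain_event_queue(**kwargs):
    """Runs on the request thread; accepts **kwargs like a signal receiver."""
    while True:
        try:
            bound_callback = event_queue.get_nowait()
        except queue.Empty:
            break
        bound_callback()


def demo():
    listener = threading.Thread(
        target=on_event_received,
        args=({"tenant_id": "acme"},),
        name="listener",
    )
    listener.start()
    listener.join()
    queued_before_drain = event_queue.qsize()
    drain_event_queue()  # stands in for request_started / request_finished
    return queued_before_drain, executed_on[-1]
```

The event arrives on the listener thread, but the recorded thread name is that of the caller of `drain_event_queue`, which is the property the solution relies on.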

Usage¶

The following sections describe the behaviour of each of the tenant lifecycle events and the ways in which they can be tapped into from code.

Events¶

- `ON_TENANT_CREATE` - When a new tenant is created, an HTTP `POST` request is made to the `tenant/` endpoint, which in turn fires this event. Callbacks hooked to this event are invoked before the in-memory configuration is updated.

- `ON_TENANT_UPDATE` - When a particular tenant's configuration is updated, an HTTP `PUT` request is made to the `tenant/<tenant_id>` endpoint, which in turn fires this event. Callbacks hooked to this event are invoked before the in-memory configuration is updated.

- `ON_TENANT_DELETE` - When a tenant is deleted, an HTTP `DELETE` request is made to the `tenant/<tenant_id>` endpoint, which in turn fires this event. Callbacks hooked to this event are invoked before the in-memory configuration is deleted.

- `POST_TENANT_CREATE` - This event is fired immediately after all callbacks hooked to the `ON_TENANT_CREATE` event have been executed. Callbacks hooked to this event are invoked after the in-memory configuration is updated with the newly created tenant's metadata.

- `POST_TENANT_UPDATE` - This event is fired immediately after all callbacks hooked to the `ON_TENANT_UPDATE` event have been executed. Callbacks hooked to this event are invoked after the in-memory configuration is updated to reflect the latest metadata changes.

- `POST_TENANT_DELETE` - This event is fired immediately after all callbacks hooked to the `ON_TENANT_DELETE` event have been executed. Callbacks hooked to this event are invoked after the particular tenant's metadata has been deleted from the in-memory configuration.
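The ordering of the `ON_*` and `POST_*` events relative to the in-memory update can be sketched as follows. This is an illustration of the described semantics only: `apply_tenant_update`, the dictionary-based configuration and the event payload shape are assumptions and do not reflect the library's implementation.

```python
def apply_tenant_update(config, event, on_callbacks, post_callbacks):
    """Run ON callbacks, update the in-memory config, then run POST callbacks."""
    for callback in on_callbacks:
        callback(event)  # still sees the old configuration
    config[event["tenant_id"]] = event["config"]
    for callback in post_callbacks:
        callback(event)  # sees the updated configuration


def demo():
    config = {"acme": {"db_host": "old-host"}}
    observed = []

    def before(event):
        observed.append(("on", config[event["tenant_id"]]["db_host"]))

    def after(event):
        observed.append(("post", config[event["tenant_id"]]["db_host"]))

    event = {"tenant_id": "acme", "config": {"db_host": "new-host"}}
    apply_tenant_update(config, event, [before], [after])
    return observed
```

A callback that needs the previous settings (for example, to close a connection to the old host) belongs on the `ON_*` event, while one that needs the new settings belongs on the `POST_*` event.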

Example¶

The following code illustrates ways by which the above mentioned events can be tapped into.

    from django.apps import AppConfig

    from tenant_router.tenant_channel_observer import tenant_channel_observable, TenantLifecycleEvent


    class DummyAppConfig(AppConfig):
        name = 'tests.dummy_app'

        def _on_tenant_create(self, event):
            # some code
            pass

        def _on_tenant_update(self, event):
            # some code
            pass

        def _on_tenant_delete(self, event):
            # some code
            pass

        def _post_tenant_create(self, event):
            # some code
            pass

        def _post_tenant_update(self, event):
            # some code
            pass

        def _post_tenant_delete(self, event):
            # some code
            pass

        def ready(self):
            tenant_channel_observable.subscribe(
                lifecycle_event=TenantLifecycleEvent.ON_TENANT_CREATE,
                callback=self._on_tenant_create
            )
            tenant_channel_observable.subscribe(
                lifecycle_event=TenantLifecycleEvent.ON_TENANT_UPDATE,
                callback=self._on_tenant_update
            )
            tenant_channel_observable.subscribe(
                lifecycle_event=TenantLifecycleEvent.ON_TENANT_DELETE,
                callback=self._on_tenant_delete
            )
            tenant_channel_observable.subscribe(
                lifecycle_event=TenantLifecycleEvent.POST_TENANT_CREATE,
                callback=self._post_tenant_create
            )
            tenant_channel_observable.subscribe(
                lifecycle_event=TenantLifecycleEvent.POST_TENANT_UPDATE,
                callback=self._post_tenant_update
            )
            tenant_channel_observable.subscribe(
                lifecycle_event=TenantLifecycleEvent.POST_TENANT_DELETE,
                callback=self._post_tenant_delete
            )